If you are interested in making your own SinteMouse, here is a step-by-step tutorial I wrote to keep things simple.

http://www.instructables.com/id/Bend-an-Optical-Mouse-to-hear-surface-textures/

The editors of Instructables liked the tutorial: they featured it on the main page and gave me a "pro membership" for a year :)

Several thousand visits! Positive feedback! New ideas suggested by readers! Cool!

A one-minute video of one of these hacked mouses in action:

https://www.youtube.com/watch?v=luZazXOj9Mc

Idea:

This circuit-bending project materializes my original premise of "listening to the textures of objects", the premise that triggered the development of SoundPaint. SoundPaint is a Java program, written in the Processing language, that tries to fulfill the premise by digital means, with the possibilities and limitations of that medium. ( http://ignaciodesalterain.blogspot.com/2011/06/soundpaint.html )

The "real" surfaces were still silent, even after an unsuccessful attempt to modify a barcode reader wand for this purpose.

I asked myself a question while replacing an old barcode reader wand at work: "Can I hear the bar codes if I somehow connect the wand's sensor to a speaker? As with the hacked ball mouses, it would be a phototransistor of some kind connected to a speaker."

Then I said to myself, "how about listening to any texture on any surface?... Woooaahh!"

Research process:

On December 13, 2011, I decided to continue researching with my old optical mouse. I had used it for years until I got a PS/2 one, which I switched to in order to free a USB port on my PC.

When I removed the board I saw that it has a hole on the underside that exposes the "belly" of the chip, navel and all.

Behind the small hole there is a micro camera with very low resolution and a high refresh rate. The picture shows the board and chip upside down, the hole in the board, and the chip's cover removed and resting on the board.

I investigated how it works and how to tap into that camera to see its image on a PC, but it is complicated, and the Instructable I read requires a specific chip.

http://www.instructables.com/id/Mouse-Cam/

Anyway, this does not fulfill my premise.

I found a Crazy Mouse that runs away when you want to grab it :)

http://www.instructables.com/id/Crazy-Mouse/

For gamers, you can add "rapid fire" to the mouse click with a 555 or a 40106.

http://www.instructables.com/id/Add-a-rapid-fire-button-to-your-mouse-using-a-555-/

But I found nothing useful for my cause...

So I kept clearing my own path, cutting bushes and marking trees.

Here is the same drilled chip, its "belly" and its "back", shown without the cover and with the cover in place.

I drilled through the chip with a bit of the same diameter as the sensor, 5 mm.

I put the cover back on the underside of the chip.

I glued the sensor into the hole on the top side with a hot glue gun.

I cut the traces that originally fed current to the LED and soldered on wires and a 270 ohm resistor.
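As a rough sanity check on the 270 ohm value, the LED current can be estimated with Ohm's law. This is only a sketch: the 9 V supply (the battery mentioned later in the post) and the 1.8 V forward drop of a typical red LED are assumptions, not measurements from this build, and `led_current_ma` is a hypothetical helper name.

```python
def led_current_ma(supply_v, forward_v, resistor_ohm):
    """Estimate LED current in milliamps: I = (Vs - Vf) / R."""
    if resistor_ohm <= 0:
        raise ValueError("resistor must be positive")
    # If the supply is below the forward drop, the LED does not conduct.
    return max(supply_v - forward_v, 0.0) / resistor_ohm * 1000.0

# Assumed values: 9 V battery, ~1.8 V forward drop (typical red LED), 270 ohm resistor.
print(round(led_current_ma(9.0, 1.8, 270.0), 1))  # roughly 26.7 mA
```

Under those assumptions the LED runs at a bit under 30 mA; a different supply voltage or LED type would change the figure.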

Then I ran the first test, in which I tried to "listen" to a newspaper.

It did not work.

The LED's light reached the sensor from behind, above the board, and interfered with it.

The solution was to paint the entire back with matte black paint so that light only enters the hole under the mouse.

I applied three coats to avoid any transparency.

Now the problem was the reverse: the little light coming through the hole after bouncing off the paper, wood, cloth, etc. was not enough to fully saturate the sensor.

The signal was barely audible, so I connected it to the base of a transistor (a BC337) to amplify it.

At the transistor's emitter output, the signal can be heard acceptably well...

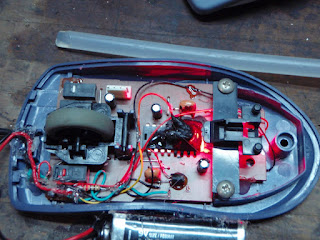

You can see the 337 with its three legs between the sensor and the 9V battery in the photo below.

Once I got it working, I started moving the mouse over everything around me and listening.

I acquired a notion of "how a texture would sound", an interesting "pseudo-synesthetic" sensation, so to speak.

A power like those of the X-Men or Neo watching the Matrix, you know.

The cross on top of the mouse is exactly over the sensor, so I know where to aim and what I am hearing.

To probe the limits of the system, I generated this image.

Some patterns attempt to generate a square waveform (-_-_-_-_), others a sawtooth (/////).

And others a sinusoid, which oscillates smoothly, similar to the movement of a pendulum, rising and falling gradually.
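A test image along these lines could be generated with a few lines of Python. This is a minimal sketch, not the tool I used for the original image: the function names, the 256-pixel width and the 32-pixel period are all made up for illustration, and the output is a plain-text PGM file that most image viewers can open.

```python
import math

def pattern_row(kind, width, period):
    """Return `width` gray levels (0 = black, 255 = white) repeating every `period` pixels."""
    row = []
    for x in range(width):
        phase = (x % period) / period  # position inside one cycle, 0.0 up to (not including) 1.0
        if kind == "square":
            level = 1.0 if phase < 0.5 else 0.0   # half white, half black
        elif kind == "sawtooth":
            level = phase                          # linear ramp, then abrupt drop
        elif kind == "sine":
            level = 0.5 + 0.5 * math.sin(2 * math.pi * phase)  # smooth rise and fall
        else:
            raise ValueError(f"unknown pattern: {kind}")
        row.append(round(level * 255))
    return row

def build_test_image(width=256, period=32, strip_height=40):
    """Stack one horizontal strip per waveform: square, sawtooth, sine."""
    rows = []
    for kind in ("square", "sawtooth", "sine"):
        rows += [pattern_row(kind, width, period)] * strip_height
    return rows

def write_pgm(path, rows):
    """Save rows of gray levels as a plain-text PGM (P2) image."""
    with open(path, "w") as f:
        f.write(f"P2\n{len(rows[0])} {len(rows)}\n255\n")
        for row in rows:
            f.write(" ".join(map(str, row)) + "\n")
```

Calling `write_pgm("patterns.pgm", build_test_image())` would save three strips to print and slide the mouse across; changing `period` rescales the pattern.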

I generated patterns on different scales to see how high and low the pitch of the sound can be.
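The link between pattern scale and pitch can be sketched as a simple ratio: the frequency heard is the speed of the mouse divided by the length of one repeat of the pattern. The numbers below are illustrative assumptions (a hand speed of 200 mm/s, 8 mm ruled lines, a 0.5 mm fine pattern), and the sketch ignores the sensor's own limits.

```python
def pitch_hz(speed_mm_per_s, period_mm):
    """Frequency produced by sliding over a repeating pattern: f = speed / period."""
    if period_mm <= 0:
        raise ValueError("period must be positive")
    return speed_mm_per_s / period_mm

# Assumed hand speed of 200 mm/s over two assumed pattern scales.
print(pitch_hz(200.0, 8.0))   # 25.0 Hz for lines 8 mm apart
print(pitch_hz(200.0, 0.5))   # 400.0 Hz for a pattern repeating every 0.5 mm
```

So a finer pattern, or a faster hand, gives a higher pitch, which is why printing the same pattern at several scales is a handy way to explore the range.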

The device has very fine resolution: I can hear the ruled lines of a notebook sheet, and thinner lines too. Thin enough, I guess. Perforated surfaces like mosquito nets, combs or a protoboard are fun to hear.

I cannot hear some reflective surfaces well, like glossy paper or glazed ceramics.

For some time I gave up on the background noise problem; the mouses work well enough to have fun with.

22/05/2017 Update:

I received some light sensors that should be more sensitive than the ones I can get at local shops. The ST-1CL3H was a game changer for my project.

With those I no longer need a +9 V / 0 V / -9 V power supply; a regulated 5 V adapter works fine.

No transistor, no operational amplifier.

Just the sensor connected to 5 V through a resistor, and a wire to take the signal out.

Much better sound quality and signal-to-noise ratio.